In the rapidly evolving landscape of artificial intelligence, where innovation moves at the speed of light, Fireworks.ai stands out as a beacon for developers and enterprises seeking high-performance generative AI solutions. As we dive into 2025, the demand for efficient, scalable, and cost-effective AI infrastructure has never been higher. Fireworks.ai products services have emerged as a game-changer, offering the fastest inference engine for open-source large language models (LLMs) and multimodal AI. This article explores the essential Fireworks.ai products services you should know about in 2025, from blazing-fast model deployment to advanced fine-tuning capabilities. Whether you’re a startup prototyping the next big app or a Fortune 500 company scaling AI across global operations, understanding these offerings will position you at the forefront of AI adoption.

Fireworks.ai, founded in 2022 and headquartered in Redwood City, California, has grown exponentially, powering over 10,000 developers and major players like Notion, Quora, and Sourcegraph. By leveraging a globally distributed cloud infrastructure, Fireworks.ai products services deliver sub-second latency and up to 120x cost reductions compared to traditional setups. In 2025, with the rise of edge computing and multimodal AI, these services are more relevant than ever, enabling real-time applications in everything from code completion to visual intelligence.

The Core of Fireworks.ai Products Services: Ultra-Fast Inference Engine

At the heart of Fireworks.ai products services is their proprietary inference engine, optimized for speed, throughput, and efficiency. Unlike legacy cloud providers that require cumbersome GPU provisioning, Fireworks.ai allows users to run state-of-the-art open-source models—like Llama 3.1, Mistral, and Stable Diffusion XL—with just one line of code. This serverless architecture means no infrastructure management headaches; auto-scaling GPUs handle spikes in demand effortlessly.

In 2025, the inference engine has been supercharged with FireAttention, a serving stack that pushes boundaries in compound AI systems. Developers report latency drops from seconds to milliseconds, crucial for latency-sensitive apps like chatbots and recommendation engines. For instance, Notion reduced response times from 2 seconds to 350 milliseconds, enhancing user experience dramatically. Fireworks.ai products services extend this to multimodal tasks, supporting text-to-image generation and vision-language models at scales previously unimaginable.

What sets this apart? Quantization-aware tuning and adaptive speculation techniques ensure models maintain quality while slashing compute costs. As AI models balloon in size—think 405B parameter behemoths—Fireworks.ai products services make them accessible without breaking the bank. Enterprises can bring their own cloud (BYOC) for added control, integrating seamlessly with AWS, Google Cloud, or Azure.

Fine-Tuning and Model Lifecycle Management: Empowering Customization

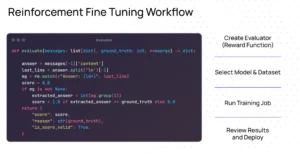

One of the most exciting aspects of Fireworks.ai products services in 2025 is the Fireworks Rapid Fine-Tuning (RFT) platform. Gone are the days of weeks-long fine-tuning cycles; RFT leverages reinforcement learning from human feedback (RLHF) and low-rank adaptations to outperform even frontier closed models like GPT-4 in domain-specific tasks. Users can upload datasets and deploy custom models in hours, not days, with no additional costs for hosting.

This service shines in use cases like personalized AI assistants. A healthcare provider might fine-tune a model on anonymized patient data for HIPAA-compliant diagnostics, while e-commerce giants customize recommendation engines for niche markets. Fireworks.ai products services include built-in evaluation protocols, ensuring tuned models meet benchmarks for accuracy and safety.

Beyond fine-tuning, the model lifecycle management tools cover the full spectrum: from experimentation in Jupyter notebooks to production deployment via REST APIs. Version control, A/B testing, and rollback features make iteration painless. In a year marked by AI governance regulations, these tools align with SOC2, HIPAA, and GDPR standards, with zero data retention policies safeguarding sensitive information.

Scaling and Security: Enterprise-Grade Fireworks.ai Products Services

Scalability is non-negotiable in 2025’s AI-driven economy, and Fireworks.ai products services excel here. The platform’s global edge network—spanning data centers in North America, Europe, and Asia—delivers low-latency inference worldwide. For high-throughput needs, like processing millions of queries daily, Fireworks.ai offers dedicated clusters with up to 50% higher GPU utilization than competitors.

Security remains a cornerstone. With end-to-end encryption, role-based access controls, and audit logs, enterprises can deploy AI confidently. Recent integrations with tools like Ollama enable on-device LLM execution, perfect for privacy-focused edge deployments in IoT and mobile apps.

Partnerships amplify these services. In October 2025, Fireworks.ai teamed up with AMD to harness Instinct GPUs for next-gen inference, promising even faster speeds for vision models. Another highlight: the launch of Embeddings and Reranking APIs on October 9, 2025, boosting search and retrieval-augmented generation (RAG) applications.

Innovations in 2025: What’s New in Fireworks.ai Products Services

2025 has been a banner year for Fireworks.ai products services, with updates tailored to emerging trends. The Vision Model Platform refresh in June introduced enhanced capabilities for real-time visual intelligence, including image-to-image transformations and ControlNet support for Stable Diffusion models. This empowers creators in AR/VR and autonomous systems.

On October 15, the “LLM on the Edge” initiative integrated Fireworks Eval Protocol with Ollama, allowing developers to run optimized models on laptops or embedded devices. For audio and video, new multimodal endpoints handle transcription and synthesis at scale.

Sustainability is also in focus: Fireworks.ai’s efficient inference reduces carbon footprints by optimizing compute, aligning with green AI mandates. Looking ahead, whispers of quantum-resistant encryption and federated learning integrations hint at even bolder strides.

Infographic outlining key 2025 updates to Fireworks.ai, including partnerships and new APIs.

Real-World Use Cases: Fireworks.ai Products Services in Action

The true power of Fireworks.ai products services reveals itself in diverse applications. Quora leveraged the platform for 3x faster response times in their AI-powered Q&A, handling petabytes of data without downtime. Sourcegraph and Cursor use it for AI coding assistants, where sub-2-second completions accelerate developer productivity by 40%.

In creative industries, Adobe-like tools benefit from SDXL’s turbocharged generation, producing high-res assets in seconds. Financial services firms deploy fine-tuned models for fraud detection, achieving 95% accuracy with minimal false positives. Even nonprofits use it for accessible translation services in underserved regions, scaling to millions of users via cost-effective inference.

These cases underscore a key 2025 trend: democratization of AI. Fireworks.ai products services lower barriers, enabling small teams to compete with tech giants.

Pricing and Accessibility: Getting Started with Fireworks.ai

While exact pricing varies by usage, Fireworks.ai operates on a pay-as-you-go model, with free tiers for prototyping. Expect competitive rates—often 20-120x cheaper than hyperscalers—for inference tokens and fine-tuning compute. Enterprise plans include SLAs for 99.99% uptime and dedicated support.

Getting started is straightforward: Sign up at fireworks.ai, grab an API key, and invoke models via Python or JavaScript SDKs. Comprehensive docs and a vibrant community forum ensure quick onboarding.

Conclusion: Why Fireworks.ai Products Services Matter in 2025

As AI permeates every sector, Fireworks.ai products services emerge as indispensable tools for innovation. From lightning-fast inference to secure, scalable deployments, they empower creators to build the future without constraints. In 2025, as open-source AI matures, platforms like Fireworks.ai will define the next wave of technological advancement. Don’t sleep on these offerings—integrate them today to stay ahead.

FAQ: Frequently Asked Questions About Fireworks.ai Products & Services

Q1: What makes Fireworks.ai’s inference engine faster than competitors? A: Fireworks.ai uses advanced optimizations like FireAttention, quantization, and a global edge network to achieve sub-second latencies and high throughput, outperforming traditional GPU setups by up to 50%.

Q2: Can I fine-tune my own models on Fireworks.ai? A: Yes, the Rapid Fine-Tuning (RFT) service allows custom tuning with RLHF and low-rank adaptations, deployable in hours at no extra hosting cost.

Q3: Is Fireworks.ai suitable for enterprise use? A: Absolutely. It offers SOC2/HIPAA compliance, zero data retention, BYOC options, and auto-scaling for mission-critical workloads.

Q4: What new features arrived in 2025? A: Key updates include AMD GPU partnerships, Embeddings/Reranking APIs, and edge LLM support with Ollama for on-device inference.

Q5: How do I get started with Fireworks.ai products services? A: Visit fireworks.ai, create a free account, and use the API playground to test models instantly.

Recommended Reading

- Fireworks.ai on Medium: Accelerating Code Completion with Fast LLM Inference – A deep dive into developer tools.

- Fireworks.ai on Medium: Raising the Quality Bar with Function Calling – Insights on model enhancements.

- Fireworks AI Overview on Golden Wiki – A concise company profile (closest to Wikipedia-style entry).